Have you ever seen a flower and wanted to know its name, or spotted a new bird at your bird feeder and wondered what it was? Or have you seen a jacket you liked and wondered where to buy it? Google Lens is an app that turns your phone's camera into a search engine that can answer all of these questions and more.

Using Google Lens is very simple. The technology behind it, however, is anything but simple: it is based on a machine learning approach called a "neural network," which is loosely modeled on the human brain. To use Google Lens, download the app from the Google Play store on your Android phone, then point your phone's camera at whatever you want to identify. A circle will appear on anything Google Lens recognizes in the image. Tap that circle and the search results will pop up. It's that easy.

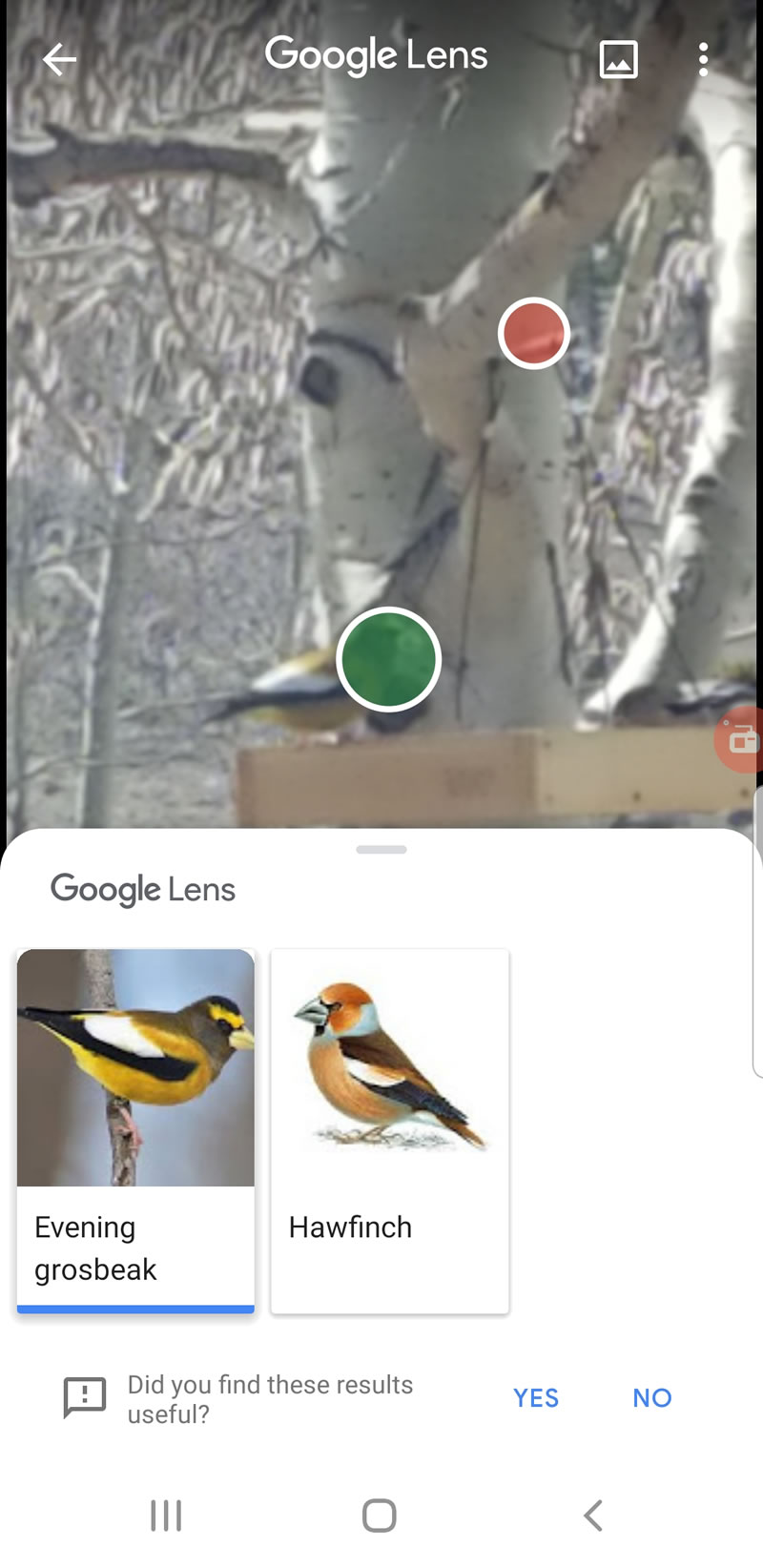

I took a couple of pictures as examples. This screenshot from my phone shows Google Lens identifying an Evening Grosbeak in a tree near my bird feeder.

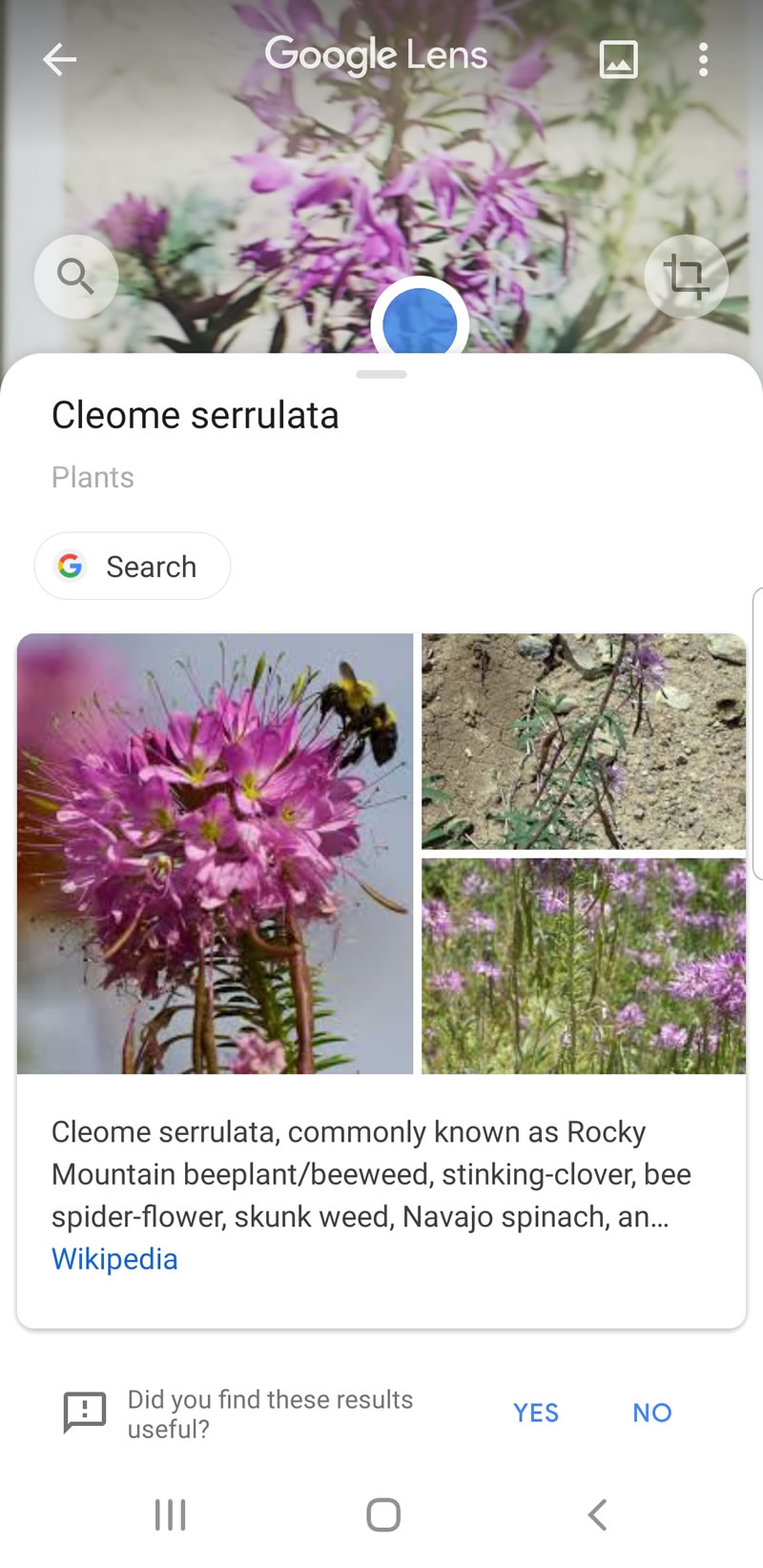

Then a friend sent me a picture of a plant, which I displayed on my computer before pointing my phone at the screen. Google Lens came back with "Cleome serrulata" and a link to its Wikipedia entry, which gave this wildflower's more common name: "Rocky Mountain Bee Plant."

So how does Google Lens work? Neural networks are a technology that allows a computer to "learn" by being trained on millions and millions of labeled images.

In the Google Lens examples I have described, before the neural network could "recognize" the bird in my picture, it first had to be "trained" by feeding hundreds of images through the network, each labeled "Evening Grosbeak," so that it could associate that visual pattern with that particular name. How well a neural network can identify an image depends largely on how many labeled examples of that subject it was given to learn from.
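For readers curious what "training" looks like in practice, here is a toy sketch of the labeled-examples idea described above. It is not how Google Lens works internally: it uses a single artificial neuron (equivalent to logistic regression), the simplest building block of a neural network, and two made-up features per photo (`amount_of_yellow` and `beak_thickness`) instead of the raw pixels a real network would learn from. All names and numbers here are hypothetical.

```python
import math

# Hypothetical toy features for each photo: [amount_of_yellow, beak_thickness],
# both scaled 0..1. Real image networks learn their own features from pixels.
TRAINING_DATA = [
    ([0.90, 0.80], 1),  # label 1 = "Evening Grosbeak"
    ([0.80, 0.90], 1),
    ([0.85, 0.70], 1),
    ([0.10, 0.20], 0),  # label 0 = some other bird
    ([0.20, 0.10], 0),
    ([0.30, 0.30], 0),
]


def sigmoid(z):
    """Squash any number into a 0..1 'confidence' score."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Return the neuron's confidence that the features describe a grosbeak."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train(examples, epochs=1000, learning_rate=0.5):
    """Nudge the weights slightly after every labeled example (gradient descent)."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Positive error: the guess was too confident; negative: not confident enough.
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(TRAINING_DATA)
    for photo in ([0.88, 0.82], [0.15, 0.25]):
        print(photo, f"grosbeak confidence: {predict(weights, bias, photo):.2f}")
```

Each pass over the labeled examples moves the neuron's weights toward the right answer, which is why more (and more varied) labeled examples generally make recognition better.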

Google Lens image search is so effective because the Google neural network behind it was trained on the hundreds of millions of queries that come through Google's Image Search engine, along with the thousands of images returned to users for each search. When you click and open an image because it matches what you were searching for, you are helping to "train" Google's neural network. That, in turn, helps Google Lens recognize that image. Pretty cool, huh?

While the Google Lens app is not 100 percent accurate, part of the fun is finding out what it does and does not recognize. In addition to recognizing plants and animals, it can currently read barcodes (to compare prices for items while you are shopping), translate text (just point it at instructions in another language when you are in a foreign country), and recognize text on a sign and use it to open a website or call a phone number automatically.

As the neural networks Google is building continue to evolve, you can expect the Google Lens app to recognize more and more objects and to add more of these kinds of search features that you can use from your phone's camera. Happy hunting, all you Android users!

The Galloping Geek