Facial recognition technology is really bad at recognizing faces. As The Denver Post recently reported: “A test done by a grassroots campaign to ban facial recognition technology in Denver found it falsely matched Denver City Council members to people in the sex offender registry. The people behind an initiative to ban facial recognition surveillance in Denver said they believe the technology isn’t ready for law enforcement.”

“A big concern that our group has is the proliferation of false positive responses that this technology puts out there,” said committee member Connors Swatling. Swatling ran a test with Amazon’s ‘Rekognition’ software. He compared the photos of Denver City Council members to about 2,000 photos from the Denver County Sex Offender Registry. It took him three days to run the data.

“The results we got back were pretty astounding,” Swatling said. “In some cases, as many as four false positives from a pool of 2,000 images, which is a very small pool.”

Swatling said Council member Chris Hinds’ photo falsely matched with four different registered sex offenders. Their crimes ranged from sexual assault of a child to criminal attempt sexual assault on a child. His test found nine council members had photos that matched with someone in the sex offender registry. In some cases, the software was 92% confident the photos were a match.

“There are ways this technology can do a lot of good, but it’s not ready to be implemented by Denver municipal agencies just yet,” Swatling said.

What is even more troubling is that one man claims he and his company have solved these problems, and they did it by scraping pictures of people off social and digital media sites. Scraping means using automated programs to search websites for data that matches a set of parameters; once the data is found, it is copied and returned to the person who initiated the search.

Scraping imagery from social and digital media sites also violates the terms of service of almost every social and digital media platform. What could make this even worse? The man who now holds more than three billion digital pictures of people just handed them over to law enforcement. Not because a warrant was issued, not because a subpoena was issued, but because he can make money doing it.

From The New York Times:

“Until recently, Hoan Ton-That’s greatest hits included an obscure iPhone game and an app that let people put Donald Trump’s distinctive yellow hair on their own photos.

Then Ton-That — an Australian techie and onetime model — did something momentous: He invented a tool that could end your ability to walk down the street anonymously. He provided it to hundreds of law enforcement agencies, ranging from local cops in Florida to the F.B.I. and the Department of Homeland Security.

His tiny company, Clearview AI, devised a groundbreaking facial recognition app. You take a picture of a person, upload it and get to see public photos of that person, along with links to where those photos appeared. The system — whose backbone is a database of more than three billion images that Clearview claims to have scraped from Facebook, YouTube, Venmo and millions of other websites — goes far beyond anything ever constructed by the United States government or Silicon Valley giants.

Federal and state law enforcement officers say that while they have only limited knowledge of how Clearview works and who is behind it, they have used its app to help solve shoplifting, identity theft, credit card fraud, murder and child sexual exploitation cases.

Until now, technology that readily identifies everyone based on his or her face has been taboo because of its radical erosion of privacy. Tech companies capable of releasing such a tool have refrained from doing so. In 2011, Google’s chairman at the time said it was the one technology the company had held back because it could be used “in a very bad way.”

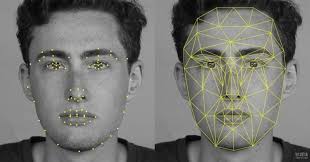

Facial recognition technology is not always accurate at correctly identifying faces. Image by Rapid API.

Some large cities, including San Francisco, have barred police from using facial recognition technology. But without public scrutiny, according to the company, more than 600 law enforcement agencies have started using Clearview in the past year. It declined to provide a list. The computer code underlying its app, analyzed by The New York Times, includes programming language to pair it with augmented-reality glasses; users would potentially be able to identify every person they saw. The tool could identify activists at a protest or an attractive stranger on the subway, revealing not just their names but where they lived, what they did and whom they knew.”

What could possibly go wrong?

Begin with the fact that Ton-That advertised his services as a tool for influencing elections. Add that his company pitched this technology to white supremacist and anti-Semite Paul Nehlen, who attempted to run for Speaker Paul Ryan’s congressional seat in Wisconsin until the Wisconsin state Republican Party rightly kicked him out of the party for his egregious views. Then add that he has cozied up to alt-right Holocaust denier Chuck Johnson, who was banned from Twitter and with whom the Capitol Police had to intervene because he was stalking Speaker Boehner.

From BuzzFeed News: “Originally known as Smartcheckr, Clearview was the result of an unlikely partnership between Ton-That, a small-time hacker turned serial app developer, and Richard Schwartz, a former adviser to then–New York mayor Rudy Giuliani. Ton-That told The Times that they met at a 2016 event at the Manhattan Institute, a conservative think tank, after which they decided to build a facial recognition company.

The following February, Smartcheckr LLC was registered in New York, with Ton-That telling The Times that he developed the image-scraping tools while Schwartz covered the operating costs. By August that year, they registered Clearview AI in Delaware, according to incorporation documents. While there’s little left online about Smartcheckr, BuzzFeed News obtained and confirmed a document, first reported by the Times, in which the company claimed it could provide voter ad micro-targeting and “extreme opposition research” to Paul Nehlen, a white nationalist who was running on an extremist platform to fill the Wisconsin congressional seat of the departing speaker of the House, Paul Ryan.

A Smartcheckr contractor, Douglass Mackey, pitched the services to Nehlen. Mackey later became known for running the racist and highly influential Trump-boosting Twitter account Ricky Vaughn. Described by the Huffington Post as “Trump’s most influential white nationalist troll,” Mackey built a following of tens of thousands of users with a mix of far-right propaganda, racist tropes, and anti-Semitic cartoons. MIT’s Media Lab ranked Vaughn, who used multiple accounts to dodge several bans, as one of the top 150 influencers of the 2016 presidential election — ahead of NBC News and the Drudge Report.”

Right now, large numbers of U.S. federal, state, and local law enforcement officers can gain access to pictures of your face without any legal justification, perhaps under the guise of using facial recognition to identify potential suspects. The only thing standing in their way is whether Mr. Ton-That’s scraping algorithms have collected every picture of you that has ever been posted online. Current federal and state laws and regulations, as well as local ordinances, do not really address this. There is almost no protection for any of us from whatever Mr. Ton-That decides to do with our pictures in pursuit of his personal profit.

The only real deterrent, which does not seem to be deterring anything, is the possibility that Facebook, Twitter, YouTube (Google), Vimeo, or another platform might sue Mr. Ton-That for violating its terms of service. Right now, based on pictures of you posted on the Internet, Mr. Ton-That knows if you’ve been sleeping, he knows if you’re awake, he knows if you’ve been bad or good, so… Well, you get the idea, and it isn’t a pleasant one!
